As data centers become integral to the digital world, their power usage and heat generation present unique challenges. Managing temperature and humidity in these facilities is essential to maintaining equipment performance, minimizing downtime, and extending the lifespan of costly hardware. Let’s dive into how data centers achieve efficient cooling, the technologies involved, and why effective cooling strategies are critical.

Data centers house servers, storage devices, and networking hardware that generate significant heat. Without proper cooling, the following risks emerge:

Equipment Damage: Excessive heat and humidity can degrade sensitive hardware, leading to malfunctions and reduced lifespan.

Operational Downtime: Overheated equipment may fail, disrupting critical operations and causing financial losses.

Safety Hazards: Heat buildup increases the risk of fires, endangering equipment, personnel, and facility operations.

With cooling systems consuming up to 33% of a data center’s energy, selecting the right solution means balancing cost-effectiveness against operational efficiency.
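To put that share in concrete terms, the arithmetic below estimates a facility's annual cooling energy and cost from its total consumption. It is a minimal sketch: the 10 GWh facility, the 33% share, the 0.10-per-kWh electricity price, and the function names are all illustrative assumptions.

```python
def cooling_energy_kwh(total_facility_kwh: float, cooling_share: float) -> float:
    """Energy attributed to cooling, given its share of total facility energy."""
    if total_facility_kwh < 0:
        raise ValueError("total_facility_kwh must be non-negative")
    if not 0.0 <= cooling_share <= 1.0:
        raise ValueError("cooling_share must be between 0 and 1")
    return total_facility_kwh * cooling_share


def annual_cooling_cost(total_facility_kwh: float, cooling_share: float,
                        price_per_kwh: float) -> float:
    """Annual cooling cost, in the same currency as price_per_kwh."""
    return cooling_energy_kwh(total_facility_kwh, cooling_share) * price_per_kwh


if __name__ == "__main__":
    # Hypothetical facility: 10 GWh per year, cooling at the 33% upper bound,
    # electricity at 0.10 per kWh.
    total_kwh = 10_000_000
    cost = annual_cooling_cost(total_kwh, 0.33, 0.10)
    # Compare against a more efficient design where cooling is 25% of the total.
    savings = cost - annual_cooling_cost(total_kwh, 0.25, 0.10)
    print(f"cooling cost: {cost:,.0f}  savings at 25% share: {savings:,.0f}")
```

With these inputs the cooling bill comes to about 330,000 per year, and bringing the cooling share down to 25% saves about 80,000.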

Below are a few of the major cooling methods, along with their benefits, their applications, and how they fit the requirements of a modern data center.

Air cooling remains a cornerstone of data center design, particularly for smaller or legacy facilities. Its enduring popularity stems from its relative simplicity and cost-effectiveness. One of its primary techniques is hot and cold aisle containment:

To optimize airflow, server racks are arranged to create alternating hot and cold aisles. Cold air is directed into the front of equipment racks through dedicated cooling systems, while hot air is expelled from the rear into designated hot aisles. Barriers, such as doors and partitions, are strategically placed to prevent the mixing of hot and cold air streams, a critical measure that significantly improves cooling efficiency. By channeling air more effectively, this approach enhances thermal control and reduces energy waste, making it a staple in small to medium-scale data centers.
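Why do barriers matter so much? A simple mixing model makes the point: if a fraction of the air entering a rack is recirculated hot exhaust instead of cold supply air, the intake temperature becomes a weighted average of the two streams. This is an idealized sketch that assumes both streams share the same density and specific heat; the 18 °C supply and 35 °C exhaust temperatures are illustrative assumptions, not measurements.

```python
def rack_inlet_temp_c(supply_c: float, exhaust_c: float, recirculation: float) -> float:
    """Rack intake temperature when a fraction of the intake air is recirculated exhaust.

    Idealized energy balance: both streams are assumed to have the same
    density and specific heat, so the mix is a simple weighted average.
    """
    if not 0.0 <= recirculation <= 1.0:
        raise ValueError("recirculation must be between 0 and 1")
    return (1.0 - recirculation) * supply_c + recirculation * exhaust_c


if __name__ == "__main__":
    # Without containment, some hot-aisle air leaks back into the cold aisle.
    for fraction in (0.0, 0.1, 0.3):
        inlet = rack_inlet_temp_c(supply_c=18.0, exhaust_c=35.0, recirculation=fraction)
        print(f"recirculation {fraction:.0%}: rack inlet {inlet:.1f} °C")
```

At 30% recirculation the intake rises from 18.0 °C to 23.1 °C, so the cooling system has to supply colder, more expensive air to compensate. Containment works by keeping that recirculation fraction close to zero.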

As data center densities rise, traditional air cooling can fall short in addressing the intense heat generated by high-performance computing systems. Liquid cooling emerges as a superior alternative, offering enhanced thermal management and energy efficiency. Two prominent techniques define this category:

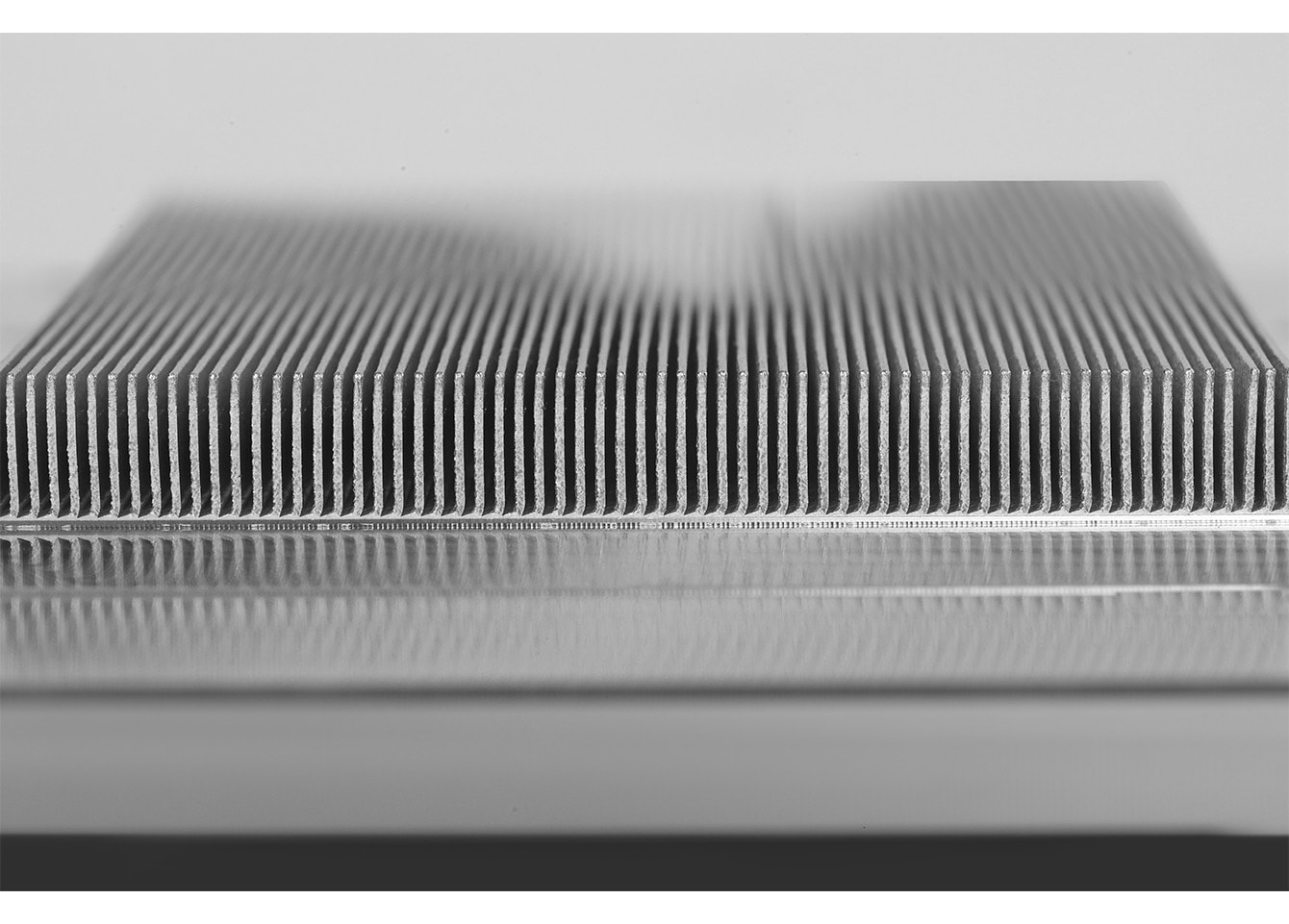

In direct-to-chip cooling, a specialized coolant is delivered directly to critical components such as processors or GPUs through embedded tubes. By capturing heat at its source, this method prevents thermal buildup, allowing high-powered devices to operate at optimal performance. This approach is particularly beneficial for edge computing and high-density data centers where heat dissipation is paramount.
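How much coolant does a direct-to-chip loop need? The steady-state energy balance (heat removed Q = mass flow × specific heat c_p × temperature rise ΔT) gives the flow required to carry away a component's heat. The sketch below assumes plain water (c_p of about 4186 J/(kg·K), density of about 1 kg/L); the 700 W heat load and 10 K rise are hypothetical, and real loops often use water-glycol mixtures with different properties.

```python
WATER_CP_J_PER_KG_K = 4186.0   # approximate specific heat of liquid water
WATER_DENSITY_KG_PER_L = 1.0   # assumption: about 1 kg per litre near room temperature


def coolant_flow_lpm(heat_load_w: float, delta_t_k: float,
                     cp_j_per_kg_k: float = WATER_CP_J_PER_KG_K,
                     density_kg_per_l: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow (litres per minute) needed to absorb heat_load_w while the
    coolant warms by delta_t_k, from Q = m_dot * c_p * dT."""
    if heat_load_w < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be >= 0 and temperature rise must be > 0")
    mass_flow_kg_s = heat_load_w / (cp_j_per_kg_k * delta_t_k)
    # Convert kg/s to L/s via density, then to L/min.
    return mass_flow_kg_s / density_kg_per_l * 60.0


if __name__ == "__main__":
    # Hypothetical 700 W accelerator, coolant allowed to warm by 10 K.
    print(f"{coolant_flow_lpm(700.0, 10.0):.2f} L/min")
```

That works out to roughly 1 L/min per device. Because flow is inversely proportional to ΔT, halving the allowed temperature rise doubles the required flow.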

An innovative and highly efficient solution, liquid immersion cooling involves submerging hardware in a non-conductive dielectric fluid. This fluid absorbs heat directly from components, eliminating the need for fans and other traditional cooling mechanisms. In addition to drastically reducing energy consumption, this method extends the lifespan of hardware by mitigating temperature fluctuations and mechanical wear.

In cooler climates, free cooling offers a sustainable alternative by utilizing ambient air to regulate data center temperatures. Several variations of free cooling have gained traction due to their environmental benefits and reduced operational costs:

Airside economization draws cold external air into the data center, circulates it through the equipment, and expels the hot air outside. By taking advantage of natural temperature differences, it minimizes reliance on mechanical cooling systems and significantly reduces energy consumption.
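The decision an airside economizer makes can be sketched as a small rule set: use outside air alone when it is cold enough, combine it with mechanical cooling when it is cooler than the hot return air but not cold enough on its own, and fall back to mechanical cooling when it is too warm or too humid. The setpoints (18 °C supply, 32 °C return, 80% relative humidity limit) and the function name are hypothetical; real controllers also account for dew point and damper limits.

```python
from enum import Enum


class CoolingMode(Enum):
    FREE = "outside air only"
    PARTIAL = "outside air plus mechanical trim"
    MECHANICAL = "mechanical cooling only"


def select_economizer_mode(outdoor_c: float, outdoor_rh_pct: float,
                           supply_setpoint_c: float = 18.0,
                           return_air_c: float = 32.0,
                           max_rh_pct: float = 80.0) -> CoolingMode:
    """Pick a cooling mode for a hypothetical airside economizer."""
    # Very humid outside air is kept out to protect the equipment.
    if outdoor_rh_pct > max_rh_pct:
        return CoolingMode.MECHANICAL
    # Outside air at or below the supply setpoint can handle the whole load
    # (dampers would blend in some return air to avoid overcooling).
    if outdoor_c <= supply_setpoint_c:
        return CoolingMode.FREE
    # Warmer than the setpoint but cooler than the return air: outside air
    # still reduces the load on the mechanical system.
    if outdoor_c < return_air_c:
        return CoolingMode.PARTIAL
    return CoolingMode.MECHANICAL


if __name__ == "__main__":
    for temp_c, rh in [(5.0, 60.0), (24.0, 50.0), (35.0, 40.0), (10.0, 95.0)]:
        print(temp_c, rh, select_economizer_mode(temp_c, rh).value)
```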

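A more advanced form of free cooling, Kyoto cooling uses a large, slowly rotating thermal wheel: hot air from the data floor warms one side of the wheel, the wheel turns, and cooler outside air carries that heat away, so the indoor and outdoor air streams exchange heat without mixing. This approach reportedly delivers up to 92% energy savings compared to conventional methods, making it a favored choice for facilities prioritizing sustainability.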
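The wheel's behavior can be approximated with the standard heat-exchanger effectiveness model: the cooled supply air ends up a fraction ε of the way from the hot return temperature toward the outdoor temperature. This is a simplified sketch assuming balanced, dry airflows on both sides of the wheel; the effectiveness of 0.8, the temperatures, and the function names are illustrative assumptions, not figures from any Kyoto cooling installation.

```python
def wheel_supply_temp_c(return_air_c: float, outdoor_c: float,
                        effectiveness: float = 0.8) -> float:
    """Supply-air temperature leaving a rotary heat wheel.

    Effectiveness model for balanced flows:
        T_supply = T_return - effectiveness * (T_return - T_outdoor)
    The indoor and outdoor streams exchange heat through the wheel but never mix.
    """
    if not 0.0 <= effectiveness <= 1.0:
        raise ValueError("effectiveness must be between 0 and 1")
    return return_air_c - effectiveness * (return_air_c - outdoor_c)


def needs_mechanical_trim(return_air_c: float, outdoor_c: float,
                          supply_setpoint_c: float, effectiveness: float = 0.8) -> bool:
    """True if the wheel alone cannot bring the supply air down to the setpoint."""
    return wheel_supply_temp_c(return_air_c, outdoor_c, effectiveness) > supply_setpoint_c


if __name__ == "__main__":
    # Hypothetical conditions: 35 °C return air, 18 °C supply setpoint.
    for outdoor in (5.0, 10.0, 20.0):
        supply = wheel_supply_temp_c(35.0, outdoor)
        trim = needs_mechanical_trim(35.0, outdoor, supply_setpoint_c=18.0)
        print(f"outdoor {outdoor:.0f} °C -> supply {supply:.1f} °C, trim needed: {trim}")
```

At 10 °C outside, the wheel alone brings 35 °C return air down to 15 °C; at 20 °C outside, the supply rises to 23 °C and mechanical cooling has to cover the difference.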

Energy efficiency is a critical factor in data center operations, given that cooling often represents a significant portion of energy costs. Strategies to improve energy efficiency include:

Regular Maintenance: Ensuring HVAC and power systems are in good repair.

Upgraded Equipment: Replacing outdated hardware with energy-efficient alternatives.

Smart Monitoring: Employing sensors, AI, and robotics for precision cooling.
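As a small illustration of smart monitoring, the sketch below checks rack inlet readings against a temperature envelope and reports the racks that fall outside it. The default 18 to 27 °C range matches the ASHRAE recommended inlet range for most IT equipment; the rack IDs and readings are hypothetical, and a production system would pull them from real sensors and smooth out noisy samples.

```python
from dataclasses import dataclass
from typing import Iterable, List

# ASHRAE recommended inlet air range for most IT equipment classes (°C).
RECOMMENDED_MIN_C = 18.0
RECOMMENDED_MAX_C = 27.0


@dataclass(frozen=True)
class InletReading:
    rack_id: str      # hypothetical rack identifier
    inlet_c: float    # measured intake air temperature in °C


def flag_out_of_range(readings: Iterable[InletReading],
                      low_c: float = RECOMMENDED_MIN_C,
                      high_c: float = RECOMMENDED_MAX_C) -> List[InletReading]:
    """Return readings outside [low_c, high_c], sorted hottest first."""
    flagged = [r for r in readings if not low_c <= r.inlet_c <= high_c]
    return sorted(flagged, key=lambda r: r.inlet_c, reverse=True)


if __name__ == "__main__":
    sample = [
        InletReading("A01", 22.5),
        InletReading("A02", 29.1),
        InletReading("B07", 16.4),
        InletReading("B08", 27.0),
    ]
    for reading in flag_out_of_range(sample):
        print(f"rack {reading.rack_id}: {reading.inlet_c:.1f} °C outside recommended range")
```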

Future Trends in Data Center Cooling

As the demand for data centers grows, future cooling innovations aim to further reduce energy consumption and environmental impact. Emerging trends include:

Solar Cooling: Converts solar energy into cooling power, making it an eco-friendly supplement to traditional systems.

Smart Robotics: Autonomous robots equipped with sensors can monitor and manage temperature fluctuations across server racks.

Hybrid Systems: Combining air, liquid, and natural cooling methods to optimize performance and sustainability.

The choice of cooling technology depends on several factors, including facility size, location, budget, and equipment density. High-density facilities may benefit from liquid immersion cooling, while smaller setups can rely on air cooling systems such as computer room air conditioning (CRAC) units.
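The trade-off above can be expressed as a rough rule of thumb keyed on rack power density, with location deciding whether free cooling is worth adding. The kW-per-rack thresholds are illustrative assumptions rather than industry standards, the helper name is hypothetical, and the sketch ignores budget and facility size, which a real assessment would weigh as well.

```python
from typing import List

# Illustrative thresholds in kW per rack (assumptions, not standards).
AIR_COOLING_MAX_KW = 15.0
DIRECT_TO_CHIP_MAX_KW = 50.0


def suggest_cooling(rack_density_kw: float, cool_climate: bool) -> List[str]:
    """Hypothetical helper mapping rack density and climate to cooling options."""
    if rack_density_kw <= 0:
        raise ValueError("rack_density_kw must be positive")
    if rack_density_kw <= AIR_COOLING_MAX_KW:
        options = ["air cooling with CRAC units and hot/cold aisle containment"]
        if cool_climate:
            # This sketch only pairs free cooling with air-cooled rooms.
            options.append("free cooling via airside economization or Kyoto cooling")
    elif rack_density_kw <= DIRECT_TO_CHIP_MAX_KW:
        options = ["direct-to-chip liquid cooling"]
    else:
        options = ["liquid immersion cooling"]
    return options


if __name__ == "__main__":
    print(suggest_cooling(8.0, cool_climate=True))
    print(suggest_cooling(30.0, cool_climate=False))
    print(suggest_cooling(80.0, cool_climate=False))
```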

Data center cooling is a dynamic and evolving field, with solutions ranging from traditional air cooling to advanced AI-driven systems. By adopting energy-efficient strategies and leveraging emerging technologies, data centers can ensure optimal performance, lower costs, and contribute to a greener future. Whether through geothermal innovations or smart temperature controls, the future of data center cooling promises efficiency, sustainability, and resilience.
